Monolithic vs. Microservice Architecture: A Performance and Scalability Evaluation

Status:: 🟩

Links:: Microservices vs. Monolith

Metadata

Authors:: Blinowski, Grzegorz; Ojdowska, Anna; Przybylek, Adam

Title:: Monolithic vs. Microservice Architecture: A Performance and Scalability Evaluation

Publication Title:: "IEEE Access"

Date:: 2022

URL:: https://ieeexplore.ieee.org/document/9717259/

DOI:: 10.1109/ACCESS.2022.3152803

Blinowski, G., Ojdowska, A., & Przybylek, A. (2022). Monolithic vs. Microservice Architecture: A Performance and Scalability Evaluation. IEEE Access, 10, 20357–20374. https://doi.org/10.1109/ACCESS.2022.3152803

Context. Since its proclamation in 2012, microservices-based architecture has gained widespread popularity due to its advantages, such as improved availability, fault tolerance, and horizontal scalability, as well as greater software development agility. Motivation. Yet, refactoring a monolith to microservices by smaller businesses and expecting that the migration will bring benefits similar to those reported by top global companies, such as Netflix, Amazon, eBay, and Uber, might be an illusion. Indeed, for systems that do not have thousands of concurrent users and can be scaled vertically, the benefits of such migration have not been sufficiently investigated, while the existing evidence is inconsistent. Objective. The purpose of this paper is to compare the performance and scalability of monolithic and microservice architectures on a reference web application. Method. The application was implemented in four different versions, covering not only two different architectural styles (monolith vs. microservices) but also two different implementation technologies (Java vs. C#.NET). Next, we conducted a series of controlled experiments in three different deployment environments (local, Azure Spring Cloud, and Azure App Service). Findings. The key lessons learned are as follows: (1) on a single machine, a monolith performs better than its microservice-based counterpart; (2) the Java platform makes better use of powerful machines in case of computation-intensive services when compared to .NET; the technology platform effect is reversed when non-computationally intensive services are run on machines with low computational capacity; (3) vertical scaling is more cost-effective than horizontal scaling in the Azure cloud; (4) scaling out beyond a certain number of instances degrades the application performance; (5) implementation technology (either Java or C#.NET) does not have a noticeable impact on the scalability performance.

Notes & Annotations

Color-coded highlighting system used for annotations

📑 Annotations (imported on 2024-03-25#10:34:10)

One of the pioneers of microservices was Netflix which began moving away from its monolithic architecture in 2009 when the term microservice did not even exist. The term was coined by a group of software architects in 2011 and officially announced a year later at the 33rd Degree Conference in Kraków [6]. However, it did not start gaining in popularity until 2014, when Lewis and Fowler published their blog on the topic [6], while Netflix shared their expertise from a successful transition [9] paving the way for other companies.

[6] J. Lewis and M. Fowler. (Mar. 2014). Microservices: A Definition of This New Architectural Term. [Online]. Available: https://www.Martinfowler.com/articles/microservices.html

[9] A. Cockcroft. (Aug. 2014). Migrating to Microservices. [Online]. Available: https://youtu.be/1wiMLkXz26M

Inspired by success stories of tech giants, many small companies or startups are considering joining the trend and are adopting microservices as a game changer. They expect that it will help them improve scalability, availability, maintainability, and fault tolerance of deployed applications [26], [27] (which have been reported as difficult to achieve in IT systems [28], [29]). Yet, microservice-based applications come with their own challenges, including:

- identifying optimal microservice boundaries [30], [31];

- orchestration of complex services (the complexity of microservices applications is pushed from the components to the integration level [15], [23]);

- maintaining data consistency and transaction management across microservices [15], [19], [32], [33];

- the difficulty in understanding the system holistically [7], [27];

- increased consumption of computing resources [7], [11], [17].

For small scale systems, these challenges may outweigh the benefits [27].

[7] C. Posta, Microservices for Java Developers: A Hands-on Introduction to Frameworks and Containers. Newton, MA, USA: O’Reilly Media, 2016.

[11] J. Soldani, D. A. Tamburri, and W.-J. Van Den Heuvel, ‘‘The pains and gains of microservices: A systematic grey literature review,’’ J. Syst. Softw., vol. 146, pp. 215–232, Dec. 2018.

[15] P. Di Francesco, P. Lago, and I. Malavolta, ‘‘Architecting with microservices: A systematic mapping study,’’ J. Syst. Softw., vol. 150, pp. 77–97, Apr. 2019.

[17] M. Jayasinghe, J. Chathurangani, G. Kuruppu, P. Tennage, and S. Perera, ‘‘An analysis of throughput and latency behaviours under microservice decomposition,’’ in Web Engineering, M. Bielikova, T. Mikkonen, and C. Pautasso, Eds. Cham, Switzerland: Springer, 2020, pp. 53–69.

[19] M. Viggiato, R. Terra, H. Rocha, M. Tulio Valente, and E. Figueiredo, ‘‘Microservices in practice: A survey study,’’ 2018, arXiv:1808.04836.

[26] D. Taibi, V. Lenarduzzi, and C. Pahl, ‘‘Processes, motivations, and issues for migrating to microservices architectures: An empirical investigation,’’ IEEE Cloud Comput., vol. 4, no. 5, pp. 22–32, Sep. 2017.

[27] J. Ghofrani and D. Lübke, ‘‘Challenges of microservices architecture: A survey on the state of the practice,’’ in Proc. ZEUS, 2018, pp. 1–8.

[28] S. Butt, S. Abbas, and M. Ahsan, ‘‘Software development life cycle & software quality measuring types,’’ Asian J. Math. Comput. Res., vol. 11, no. 2, pp. 112–122, 2016.

[29] A. Jarzębowicz and P. Marciniak, ‘‘A survey on identifying and addressing business analysis problems,’’ Found. Comput. Decis. Sci., vol. 42, pp. 315–337, Dec. 2017.

[30] S. Li, H. Zhang, Z. Jia, Z. Li, C. Zhang, J. Li, Q. Gao, J. Ge, and Z. Shan, ‘‘A dataflow-driven approach to identifying microservices from monolithic applications,’’ J. Syst. Softw., vol. 157, Nov. 2019, Art. no. 110380.

[31] H. Stranner, S. Strobl, M. Bernhart, and T. Grechenig, ‘‘Microservice decomposition: A case study of a large industrial software migration in the automotive industry,’’ in Proc. 15th Int. Conf. Eval. Novel Approaches Softw. Eng., 2020, pp. 498–505.

[32] M. Bruce and P. A. Pereira, Microservices in Action. New York, NY, USA: Simon and Schuster, 2018.

[33] A. Banijamali, P. Kuvaja, M. Oivo, and P. Jamshidi, ‘‘Kuksa: Self-adaptive microservices in automotive systems,’’ in Product-Focused Software Process Improvement, M. Morisio, M. Torchiano, and A. Jedlitschka, Eds. Cham, Switzerland: Springer, 2020, pp. 367–384.

The most significant advantage of the monolithic architecture is its simplicity – in comparison to distributed applications of various genres, monolithic ones are much easier to test, deploy, debug and monitor. All data is retained in one database with no need for its synchronization; all internal communication is done via intra-process mechanisms. Hence it is fast and does not suffer from problems typical to inter-process communication (IPC). The monolithic approach is a natural and first-choice approach to building an application – all logic for handling requests runs in a single process.

It is worth mentioning that the microservice communication paradigm differs significantly from approaches based on Service Oriented Architecture (SOA) [39] such as Enterprise Service Bus (ESB), which include sophisticated and ‘‘heavy-weight’’ facilities of message routing, filtering, and transformation. The microservice approach favors: ‘‘smart endpoints and dumb pipes’’ [3]. There is no standard for communication or transport mechanisms for microservices. Microservices communicate with each other using well-standardized lightweight internet protocols, such as HTTP and REST [5], or messaging protocols, such as JMS or AMQP.

[3] M. Fowler. Microservice Premium. Accessed: May 30, 2020. [Online]. Available: https://Martinfowler.com/bliki/MicroservicePremium.html

[5] B. Terzić, V. Dimitrieski, S. Kordić, G. Milosavljević, and I. Luković, ‘‘Development and evaluation of microbuilder: A model-driven tool for the specification of rest microservice software architectures,’’ Enterprise Inf. Syst., vol. 12, nos. 8–9, pp. 1034–1057, 2018.

[39] A. Poniszewska-Marańda, P. Vesely, O. Urikova, and I. Ivanochko, ‘‘Building microservices architecture for smart banking,’’ in Advances in Intelligent Networking and Collaborative Systems, L. Barolli, H. Nishino, and H. Miwa, Eds. Cham, Switzerland: Springer, 2020, pp. 534–543.

Microservice-based applications scale well horizontally, not only in the technical sense, but also concerning the organization’s structuring of developer teams, which can be kept smaller and more agile [5], [22], [44], [45].

[22] A. Poniszewska-Marańda and E. Czechowska, ‘‘Kubernetes cluster for automating software production environment,’’ Sensors, vol. 21, no. 5, p. 1901, 2021.

[44] V. Lenarduzzi and O. Sievi-Korte, ‘‘On the negative impact of team independence in microservices software development,’’ in Proc. 19th Int. Conf. Agile Softw. Develop., Companion, New York, NY, USA, May 2018, pp. 1–4.

[45] F. Ramin, C. Matthies, and R. Teusner, ‘‘More than code: Contributions in scrum software engineering teams,’’ in Proc. IEEE/ACM 42nd Int. Conf. Softw. Eng. Workshops, New York, NY, USA, Jun. 2020, pp. 137–140.

In this work, we focus on application performance. IPC required between microservice components introduces substantial overhead when compared to intra-process communication (function calls and method invocations) used in monolithic applications. IPC is implemented as an operating system’s kernel service. In most cases, it requires data copying between user and kernel space, and hence it reduces (in many cases significantly) application performance. Applications that do not service significant user traffic will show degraded throughput and response times when migrated from a monolithic to a distributed architecture. Only as the number of user requests increases can proper scaling of a microservice-based application outweigh the communication overhead – studying this phenomenon is, in fact, the major topic of our work.

Scalability is the property of a system to handle a growing amount of work by adding resources to the system [55]. The manner in which additional resources are added defines which of two scaling approaches is taken [8], [14], [56], [57] - vertical scaling or horizontal scaling. The former, also known as scaling up, refers to adding more resources (CPU, memory, and storage) to an existing machine. It is the more straightforward approach, but it is limited by the most powerful hardware available on the market [56].

[8] R. Rajesh, Spring Microservices. London, U.K.: Packt, 2016.

[14] A. Kwan, J. Wong, H.-A. Jacobsen, and V. Muthusamy, ‘‘Hyscale: Hybrid and network scaling of dockerized microservices in cloud data centres,’’ in Proc. IEEE 39th Int. Conf. Distrib. Comput. Syst. (ICDCS), Dec. 2019, pp. 80–90.

[55] A. B. Bondi, ‘‘Characteristics of scalability and their impact on performance,’’ in Proc. 2nd Int. Workshop Softw. Perform., New York, NY, USA, 2000, pp. 195–203.

[56] B. Wilder, Cloud Architecture Patterns: Using Microsoft Azure. Newton, MA, USA: O’Reilly Media, 2012.

[57] L. Lu, X. Zhu, R. Griffith, P. Padala, A. Parikh, P. Shah, and E. Smirni, ‘‘Application-driven dynamic vertical scaling of virtual machines in resource pools,’’ in Proc. IEEE Netw. Oper. Manage. Symp. (NOMS), May 2014, pp. 1–9.

In contrast, horizontal scaling, also known as scaling out, refers to adding more machines and distributing the workload. It is more complex because it has an influence on the application architecture, but can offer scales that far exceed those that are possible with vertical scaling [56]. Horizontal scaling is more common with microservice applications [36], even though a monolith may be also scaled out by running many instances behind a load-balancer [6].

Nevertheless, scaling out a monolithic application may not be so effective because it commonly offers a lot of services - some of them more popular than others. In order to increase the availability of a monolithic application, the entire application needs to be replicated. This leads to over scaling in non-popular services, which consume server resources even when they are idle, and in effect results in sub-optimal resource utilization [59]. On the other hand, in order to increase the availability of a microservice application, only highly demanded microservices that consume a large amount of server resources will get more instances [18].

[18] F. Auer, V. Lenarduzzi, M. Felderer, and D. Taibi, ‘‘From monolithic systems to microservices: An assessment framework,’’ Inf. Softw. Technol., vol. 137, Dec. 2021, Art. no. 106600.

[59] T. Ueda, T. Nakaike, and M. Ohara, ‘‘Workload characterization for microservices,’’ in Proc. IEEE Int. Symp. Workload Characterization (IISWC), Sep. 2016, pp. 1–10.

In [59] Ueda et al. analyzed the behavior of an application implemented as a microservice and monolithic variant for two popular language runtimes – Node.js and Java Enterprise Edition (EE) – using the Acme Air benchmark suite and Apache JMeter for performance data collection. Additionally, tests were conducted both for native process and Docker container deployments. Throughput and cycles per instruction (CPI) were used as performance metrics; the test environment was equivalent to a private cloud deployment. The authors observed a significant overhead in the microservice architecture – on the same hardware configuration the performance of the microservice model was 79% lower with respect to the monolithic model. The microservice model spent a more significant amount of time in runtime libraries to process one client request than the monolithic model, namely 4.22x on a Node.js application server and 2.69x on a Java EE application server.

@Ueda.etal.2016.WorkloadCharacterizationMicroservices

Our benchmarking application is implemented in four functionally identical versions, covering not only two different architectural styles (monolith and microservices) but also two leading technologies intended for the development of server-side software:

- Java – implemented in Java 8 with Spring Boot framework 2.3.0,

- .NET – implemented in C# version 8 with ASP.NET Core framework 3.1.

All versions of our application expose two REST endpoints that return serialized JSON objects, as shown in figure 1. The REST endpoints correspond to two services:

- City service – simulates a simple single-object query; the input contains a city name string, while the response contains city data (id, name, state, and population);

- Route service – simulates a computationally intensive query; the output contains a path (an ordered series of points) that is the shortest route between 10,000 randomly chosen points. Each time, the route is computed by a heuristic algorithm of the traveling salesman class.
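The annotation does not say which traveling-salesman heuristic the Route service uses. As an illustration only, the sketch below assumes a greedy nearest-neighbour heuristic; the function names `nearest_neighbour_route` and `route_length` are hypothetical and do not come from the paper.

```python
import math
import random


def nearest_neighbour_route(points):
    """Greedy tour: start at the first point, then repeatedly visit the
    closest unvisited point. This is an ASSUMED heuristic; the paper only
    says "a heuristic algorithm of the traveling salesman class"."""
    if not points:
        return []
    unvisited = list(points[1:])
    route = [points[0]]
    while unvisited:
        last = route[-1]
        # Index of the remaining point closest to the current position.
        idx = min(range(len(unvisited)),
                  key=lambda i: math.dist(last, unvisited[i]))
        route.append(unvisited.pop(idx))
    return route


def route_length(route):
    """Total Euclidean length of the path (not closed into a cycle)."""
    return sum(math.dist(a, b) for a, b in zip(route, route[1:]))


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so repeated runs are comparable
    points = [(rng.random(), rng.random()) for _ in range(200)]
    route = nearest_neighbour_route(points)
    print(len(route), route_length(route))
```

The heuristic is quadratic in the number of points, so a request over 10,000 points is CPU-bound, which matches the role the Route service plays in the benchmark.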

As the performance measure, we have used throughput, which is calculated by JMeter as the number of requests processed by the server, divided by the total time in seconds to process the requests. The time is measured from the start of the first request to the end of the last request. This includes any intervals between requests, as it is supposed to represent the load on the server.
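The throughput definition above can be written out as a short calculation. This is a minimal sketch, assuming each request is recorded as a `(start, end)` pair of timestamps in seconds; the function name `throughput` and the sample values are illustrative, not taken from the paper or from JMeter itself.

```python
def throughput(samples):
    """Requests per second, measured from the start of the first request
    to the end of the last one, so idle gaps between requests count too.

    samples: list of (start_seconds, end_seconds) tuples, one per request.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    first_start = min(start for start, _ in samples)
    last_end = max(end for _, end in samples)
    elapsed = last_end - first_start
    if elapsed <= 0:
        raise ValueError("elapsed time must be positive")
    return len(samples) / elapsed


if __name__ == "__main__":
    # Three hypothetical requests spread over a 4-second window.
    print(throughput([(0.0, 0.5), (1.0, 1.5), (3.0, 4.0)]))
```

In this example the requests occupy only 2 seconds of actual processing, but the window is 4 seconds long, so the result is 0.75 requests per second rather than 1.5.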

Throughput is the most commonly used measure in similar works – see for example [42], [59]. Other measures include response time and cycles per instruction (CPI), but throughput is best suited when we also want to compare the overall costs of running the application in a given technology and configuration.

To gather performance data, we used the following test procedure: the City service was invoked 1000 times, the Route service was invoked 100 times, the number of threads was set to 10 for both scenarios. Each of the test runs was repeated 20 times.
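The authors ran this procedure with JMeter. Purely as an illustration of its shape, the sketch below drives a stand-in callable from a pool of 10 threads and reports throughput per run. The names `run_load` and `fake_city_service` are hypothetical, and it is an assumption here that the invocation counts are totals across all threads rather than per thread.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_load(call, total_requests, threads=10):
    """Invoke call() total_requests times from a thread pool and return
    (completed_requests, requests_per_second)."""
    def timed_call(_):
        start = time.perf_counter()
        call()
        return start, time.perf_counter()

    with ThreadPoolExecutor(max_workers=threads) as pool:
        samples = list(pool.map(timed_call, range(total_requests)))

    # Window runs from the first request's start to the last request's end.
    elapsed = max(end for _, end in samples) - min(start for start, _ in samples)
    return len(samples), len(samples) / elapsed


def fake_city_service():
    """Stand-in for an HTTP call to the City endpoint (assumption)."""
    time.sleep(0.001)


if __name__ == "__main__":
    for run in range(3):  # the paper repeats each run 20 times
        count, rps = run_load(fake_city_service, total_requests=100)
        print(f"run {run}: {count} requests, {rps:.1f} req/s")
```

A real driver would replace `fake_city_service` with an HTTP request and would also need warm-up runs and error handling, which are omitted here.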

Since cloud environments do not necessarily guarantee stable performance, owing to many factors related to network stability, virtual server availability, etc., we compensated for unexpected variations by repeating each test series five times, computing the median of the results, and finally choosing the test series whose median was the median of the medians obtained across all test runs. The validity of such an approach is discussed in ‘‘Software Microbenchmarking in the Cloud’’ work by Laaber et al. [67].
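The median-of-medians selection described above can be sketched as follows. The function name `representative_series` and the throughput values are made up for illustration; only the selection rule comes from the text.

```python
from statistics import median


def representative_series(series):
    """Pick the test series whose median equals the median of all
    per-series medians.

    series: list of test series, each a list of throughput values.
    Returns (index_of_chosen_series, its_median).
    """
    medians = [median(s) for s in series]
    target = median(medians)
    # With an odd number of series (five in the paper) the target is one
    # of the medians; the closest match also covers an even count.
    idx = min(range(len(medians)), key=lambda i: abs(medians[i] - target))
    return idx, medians[idx]


if __name__ == "__main__":
    # Five hypothetical series of throughput measurements (req/s).
    runs = [
        [10, 12, 11],
        [20, 19, 21],
        [15, 14, 16],
        [30, 31, 29],
        [5, 6, 7],
    ]
    print(representative_series(runs))
```

Selecting a whole series rather than averaging across series keeps the reported numbers tied to one real execution while still discarding outlier runs.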

[67] C. Laaber, J. Scheuner, and P. Leitner, ‘‘Software microbenchmarking in the cloud. How bad is it really?’’ Empirical Softw. Eng., vol. 24, no. 4, pp. 2469–2508, Aug. 2019.

Note that when testing the performance of the microservice-based application, only one service was running. This is a common approach when it comes to horizontal scaling of microservices – each popular microservice gets its own virtual machines according to the associated load [18], [68].

[68] M. R. López and J. Spillner, ‘‘Towards quantifiable boundaries for elastic horizontal scaling of microservices,’’ in Proc. 10th Int. Conf. Utility Cloud Comput., New York, NY, USA, 2017, pp. 35–40.

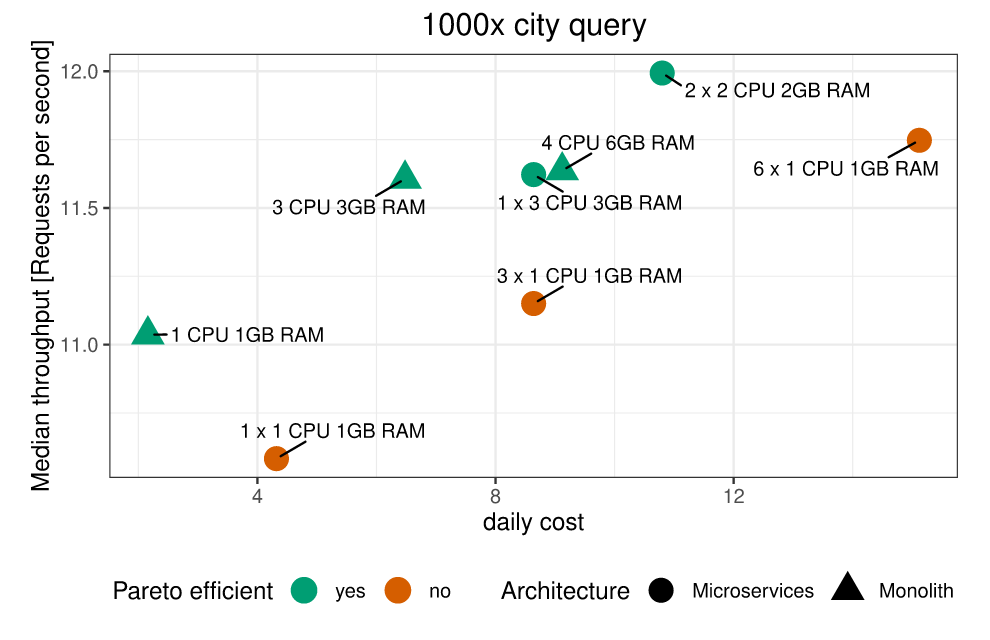

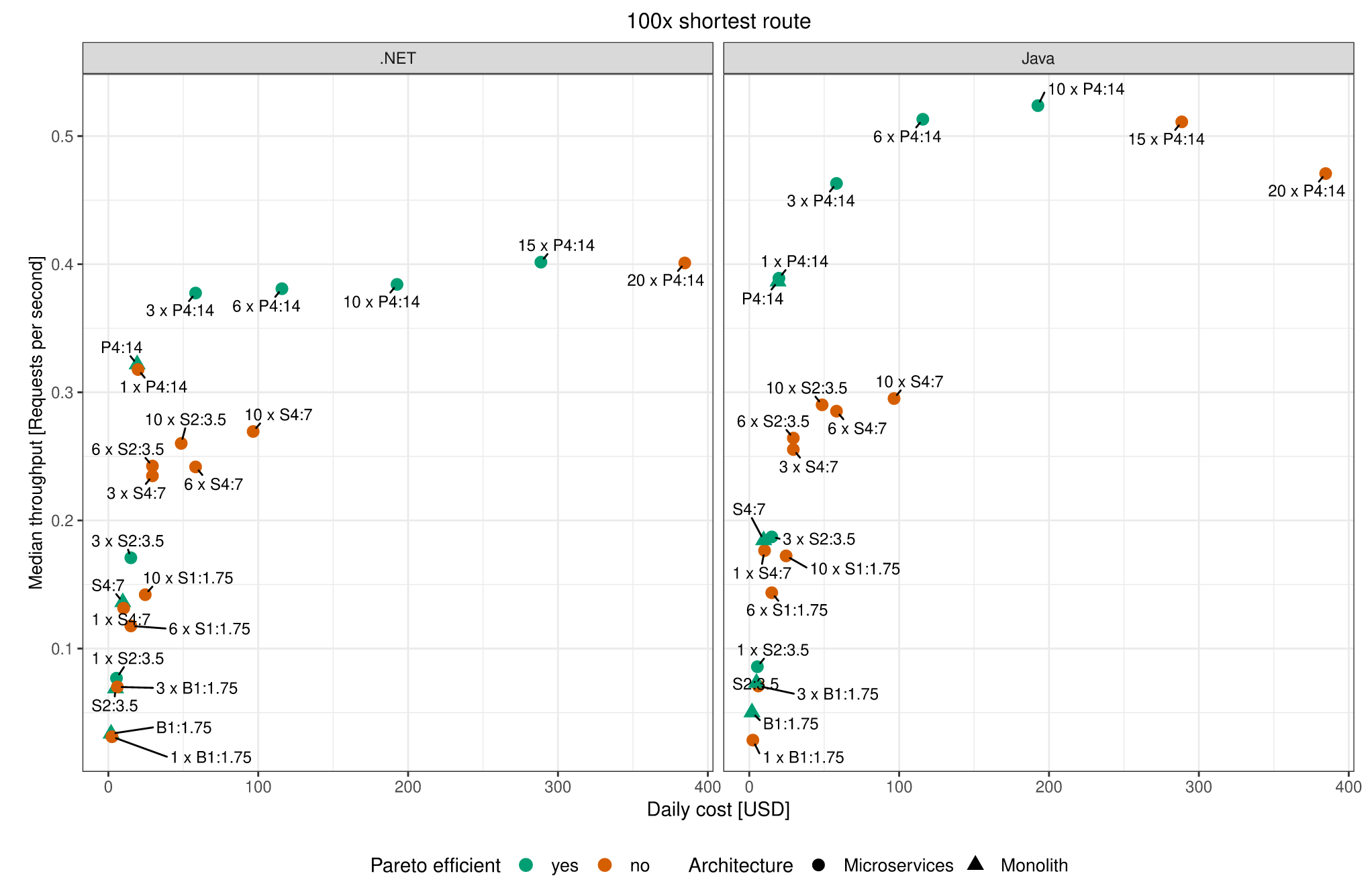

Application daily cost vs. performance is shown in figure 6, where the pricing was calculated according to data presented in Table 2. Here, and in subsequent similar cases, we always use the obtained throughput’s median for a cost comparison. On this and subsequent figures, Pareto dominated configurations, marked in red, relate to cases where the same or better performance can be achieved by a cheaper, Pareto efficient configuration. Comparing the costs, we can conclude that in the Spring Cloud environment, horizontal scaling of low-performance machines (1 CPU & 1 GB RAM) always leads to Pareto dominated configurations.

FIGURE 6. Throughput and cost in the Azure spring cloud environment – city application.
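The Pareto classification used in the cost figures can be sketched as below. The code applies the standard dominance test (another configuration is no worse on cost and throughput and strictly better on at least one), which slightly generalizes the wording in the annotation. The function name `pareto_split`, the configuration names, and all numbers are hypothetical and are not the paper's data.

```python
def pareto_split(configs):
    """Split configurations into Pareto efficient and Pareto dominated.

    configs: dict mapping name -> (daily_cost, throughput).
    Lower cost is better; higher throughput is better.
    Returns (efficient_names, dominated_names) in input order.
    """
    efficient, dominated = [], []
    for name, (cost, perf) in configs.items():
        is_dominated = any(
            other != name
            and o_cost <= cost
            and o_perf >= perf
            and (o_cost < cost or o_perf > perf)
            for other, (o_cost, o_perf) in configs.items()
        )
        (dominated if is_dominated else efficient).append(name)
    return efficient, dominated


if __name__ == "__main__":
    # Hypothetical (daily cost, median throughput) pairs.
    example = {
        "A": (10, 100),
        "B": (20, 90),   # costs more than A and is slower: dominated
        "C": (20, 150),
        "D": (30, 150),  # same throughput as C at higher cost: dominated
    }
    print(pareto_split(example))
```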

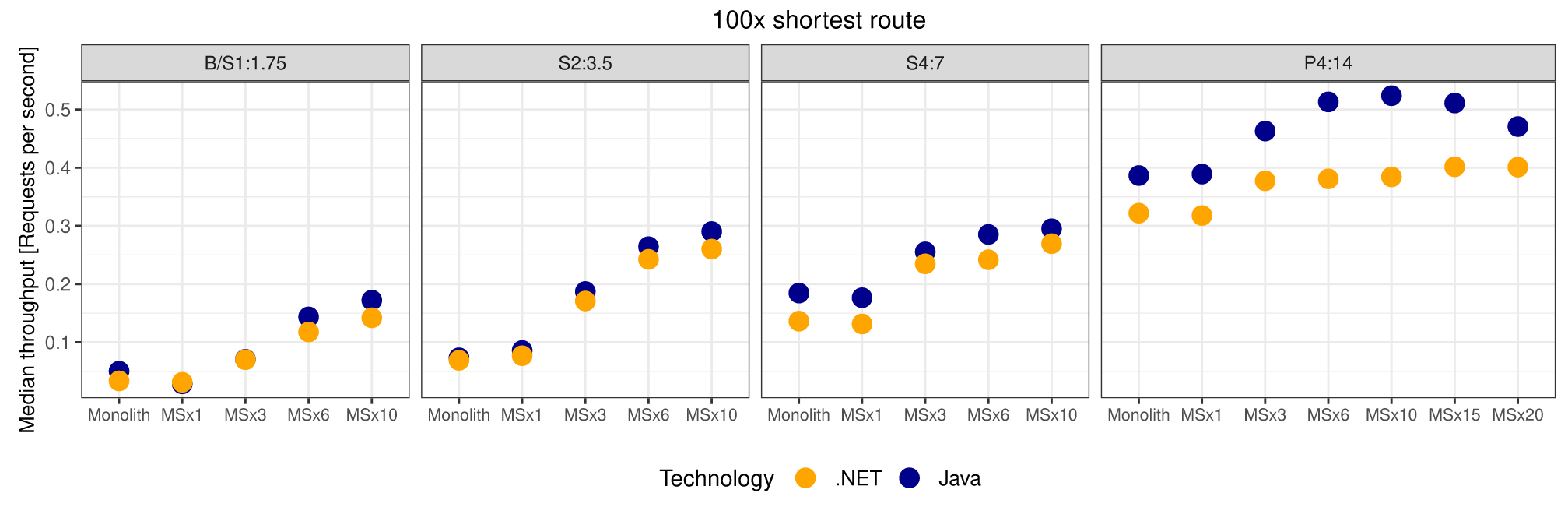

Comparing these results to the previous ones, we can conclude that in the case of computationally intensive applications, positive results of horizontal scaling are more apparent – throughput scales almost linearly with the number of instances. Also, there is no substantial performance difference between monolithic and microservice applications with the same CPU resources.

Route service daily cost to performance ratio is shown in figure 8. In this case, three microservice configurations were Pareto dominated, whereas their resource-corresponding monolith versions were Pareto efficient. It is worth noting that MSx6 1 CPU & 1 GB RAM turned out to be the most powerful configuration, but MSx2 2 CPU & 2 GB RAM and monolithic version with 4 CPU & 6 GB RAM delivered only slightly lower performance with substantially lower cost.

FIGURE 8. Throughput and cost in the Azure spring cloud environment—route service.

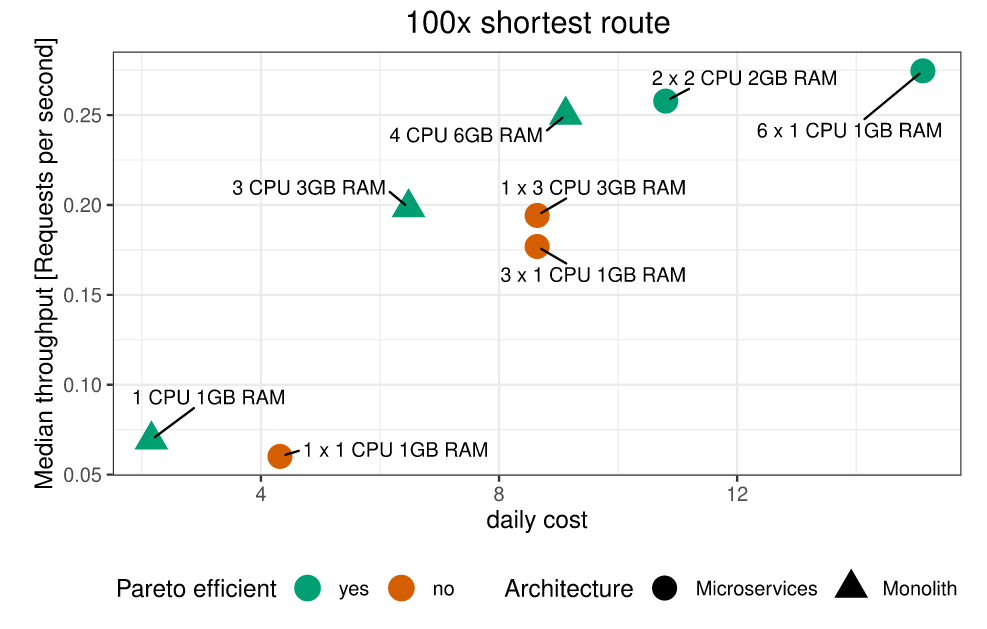

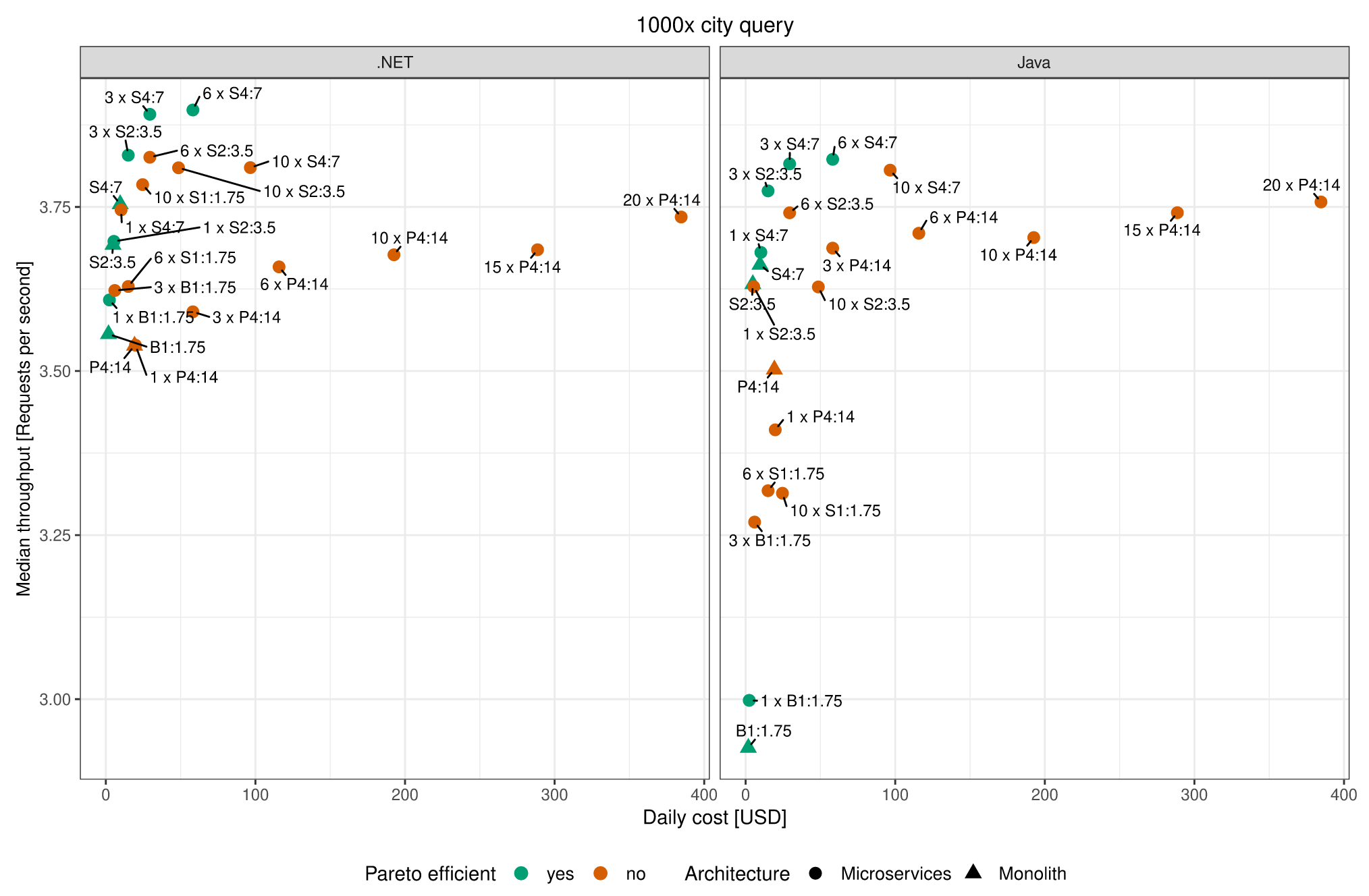

Comparing the costs of running the City service in different configurations, we can conclude that Pareto efficient configuration variants for .NET were: monolithic B1:1.75, S2:3.5, S4:7 and microservice-based: B1:1.75, 1 x S2:3.5, 3 x S2:3.5, 3 x S4:7 and 6 x S4:7. In the case of Java, an almost identical set of configurations with an addition of 1 x S4:7 was Pareto efficient.

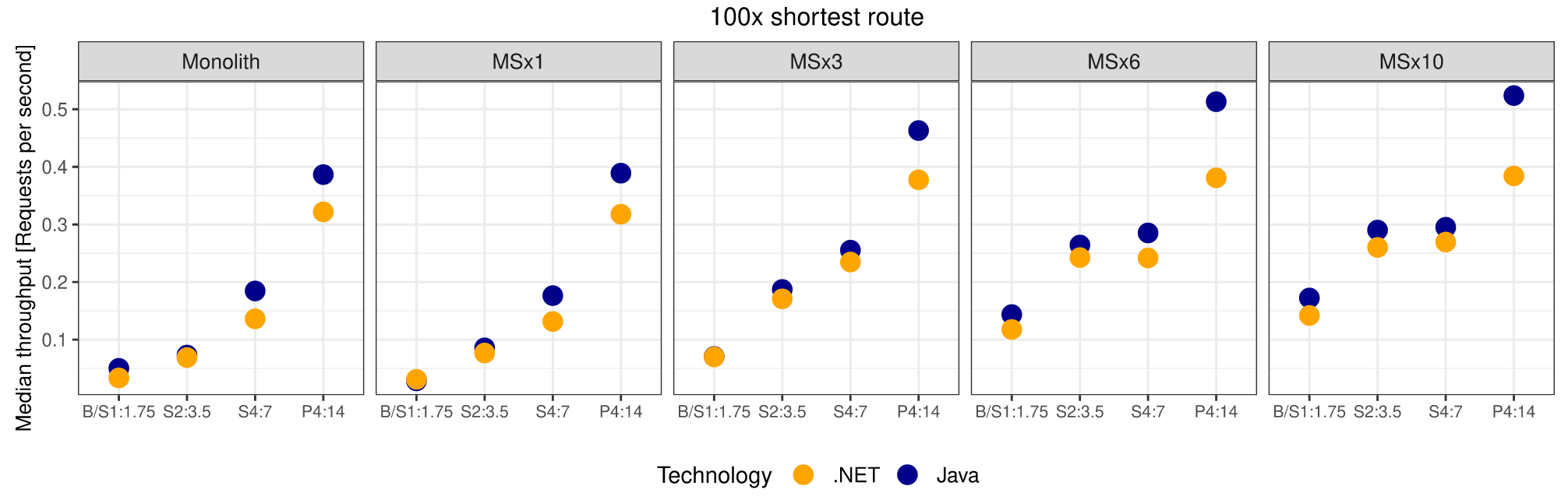

We can also observe an interesting trend: for low-power configurations, throughput is similar for the .NET and Java variants, but as the power of the virtual machine is increased, the Java variant shows a large performance gain over .NET – compare results for S4:7 and P4:14 on figure 14. Java also exhibits better vertical scaling – see results for P4:14 on figure 15.

Comparing the costs for the Route service, we can conclude that Pareto efficient configuration variants were: monolithic B1:1.75, S2:3.5, S4:7 and P4:14, as well as microservice-based: 1 × S2:3.5, 3 × S2:3.5, 3 × P4:14, 6 × P4:14, 10 × P4:14. In the case of Java: an almost identical set of configurations with an addition of 1 × S4:7 - both for .NET and Java. Additionally, 15 × P4:14 may be selected for microservice .NET and 1 × P4:14 for microservice Java. Almost all high-power configurations (except for 20 × P4:14) are Pareto efficient – this is in contrast to the City test where high-power configurations were Pareto dominated.

On a single machine, the monolith performs better than the microservices (see figure 4) because of the additional overhead of request passing between microservice components. Note that this difference is not noticeable in our cloud benchmarks because the application gateway was deployed on a separate virtual machine, which relieved the primary machine that hosted microservices. Thereby, as for both cloud experiments, rather than comparing the performance of both architectures running on the same VM type, we must compare the performance of the architectures hosted on configurations that have similar infrastructure costs. When such comparisons are made, the monolith outperforms the microservices (see figures 6, 8, 13, and 18), even though the latter was still privileged, because when testing the performance, only one service was running (for details see Section IV-D).

FIGURE 13. Throughput and cost in the Azure app service environment—city service.

FIGURE 14. Throughput vs. horizontal scaling in the Azure app service environment—route service.

The monolith scaled vertically was Pareto efficient in servicing both simple and complex requests on all hardware configurations except P4:14, regardless of the implementation technology. Also note that all experimental runs executed on P4:14 turned out to be Pareto dominated. Likewise, scaling up the microservice-based application performed well and was more cost-efficient than horizontal scaling. Nevertheless, vertical scaling was limited by the most powerful VM available; therefore the best performance was achieved by the microservice architecture that was both vertically and horizontally scaled.

FIGURE 15. Throughput vs. vertical scaling in the Azure app service environment—route service.

Furthermore, with microservice architecture, horizontal scaling effects differed significantly in the case of simple and complex services. Concerning the number of instances, in the case of the simple service, top performance was achieved with a smaller number of virtual machines than in the complex service. There is a visible cap on horizontal scaling for both service types, where the further increase of the number of instances does not improve and may even degrade performance. This effect of over-scaling manifests itself when CPU overhead resulting from load distribution exceeds the benefits of increasing the total processing power.

FIGURE 18. Throughput and cost in the Azure app service environment—route service.

In the selection of the technology platform, characteristics of network load vs. CPU load should be taken into account – for intensive network services with a low CPU load, .NET is a better choice than Java; on the other hand, Java consistently proved to utilize CPU better in computation-intensive services. .NET also better utilizes cheap hardware with lower computational capacity as long as the computation is not intensive (see the first plot on figure 10). On the other hand, the Java platform makes better use of powerful machines in the case of computation-intensive services (see the last plot on figure 15).

In conclusion, a microservice architecture is not the best suited for every context. A monolithic architecture seems to be a better choice for simple, small-sized systems that do not have to support a large number of concurrent users.