An Open-Source Benchmark Suite for Microservices and Their Hardware-Software Implications for Cloud & Edge Systems

Status:: 🟩

Links:: Microservices Reference and Benchmark Applications

Metadata

Authors:: Gan, Yu; Zhang, Yanqi; Cheng, Dailun; Shetty, Ankitha; Rathi, Priyal; Katarki, Nayan; Bruno, Ariana; Hu, Justin; Ritchken, Brian; Jackson, Brendon; Hu, Kelvin; Pancholi, Meghna; He, Yuan; Clancy, Brett; Colen, Chris; Wen, Fukang; Leung, Catherine; Wang, Siyuan; Zaruvinsky, Leon; Espinosa, Mateo; Lin, Rick; Liu, Zhongling; Padilla, Jake; Delimitrou, Christina

Title:: An Open-Source Benchmark Suite for Microservices and Their Hardware-Software Implications for Cloud & Edge Systems

Date:: 2019

Publisher:: Association for Computing Machinery

URL:: https://dl.acm.org/doi/10.1145/3297858.3304013

DOI:: 10.1145/3297858.3304013

Gan, Y., Zhang, Y., Cheng, D., Shetty, A., Rathi, P., Katarki, N., Bruno, A., Hu, J., Ritchken, B., Jackson, B., Hu, K., Pancholi, M., He, Y., Clancy, B., Colen, C., Wen, F., Leung, C., Wang, S., Zaruvinsky, L., … Delimitrou, C. (2019). An Open-Source Benchmark Suite for Microservices and Their Hardware-Software Implications for Cloud & Edge Systems. Proceedings of the Twenty-Fourth International Conference on Architectural Support for Programming Languages and Operating Systems, 3–18. https://doi.org/10.1145/3297858.3304013

Cloud services have recently started undergoing a major shift from monolithic applications to graphs of hundreds or thousands of loosely-coupled microservices. Microservices fundamentally change a lot of assumptions current cloud systems are designed with, and present both opportunities and challenges when optimizing for quality of service (QoS) and cloud utilization. In this paper we explore the implications microservices have across the cloud system stack. We first present DeathStarBench, a novel, open-source benchmark suite built with microservices that is representative of large end-to-end services, modular and extensible. DeathStarBench includes a social network, a media service, an e-commerce site, a banking system, and IoT applications for coordination control of UAV swarms. We then use DeathStarBench to study the architectural characteristics of microservices, their implications in networking and operating systems, their challenges with respect to cluster management, and their trade-offs in terms of application design and programming frameworks. Finally, we explore the tail at scale effects of microservices in real deployments with hundreds of users, and highlight the increased pressure they put on performance predictability.

Notes & Annotations


📑 Annotations (imported on 2024-03-21 18:25:06)

The richer the functionality of cloud services becomes, the more the modular design of microservices helps manage system complexity. Microservices also make it possible to deploy, scale, and update individual services independently, which avoids long development cycles and improves elasticity.
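To make the independent-scaling point concrete, here is a minimal sketch, assuming a hypothetical application whose components each have a fixed per-replica CPU cost (the component names and core counts are illustrative, not from the paper). It compares the cores added when a load spike on one component forces the whole monolith to be replicated versus when only that microservice is scaled out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    cores_per_replica: float  # hypothetical CPU cost of one replica


def monolith_scale_cost(components: list[Component], extra_replicas: int) -> float:
    """Cores added when every component is replicated together (monolith)."""
    per_instance = sum(c.cores_per_replica for c in components)
    return per_instance * extra_replicas


def microservice_scale_cost(components: list[Component], hot: str, extra_replicas: int) -> float:
    """Cores added when only the overloaded component is replicated (microservices)."""
    by_name = {c.name: c for c in components}
    return by_name[hot].cores_per_replica * extra_replicas


# Illustrative application; names and costs are assumptions.
app = [Component("frontend", 1.0), Component("post-storage", 2.0), Component("timeline", 0.5)]

print(monolith_scale_cost(app, 3))                      # 10.5
print(microservice_scale_cost(app, "post-storage", 3))  # 6.0
```

The model deliberately ignores the per-replica overheads that microservices add (extra RPC processing, more processes to manage), so it shows only the elasticity side of the trade-off.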

Even though modularity in cloud services was already part of the Service-Oriented Architecture (SOA) design approach [77], the fine granularity of microservices and their independent deployment create hardware and software challenges different from those in traditional SOA workloads.

The DeathStarBench suite includes six end-to-end services that cover a wide spectrum of popular cloud and edge services: a social network, a media service (movie reviewing, renting, streaming), an e-commerce site, a secure banking system, and Swarm (an IoT service for coordination control of drone swarms, with and without a cloud backend).

Finally, to track how user requests progress through microservices, we have developed a lightweight distributed tracing system, transparent to the user and similar to Dapper [76] and Zipkin [17], that tracks requests at RPC granularity, associates RPCs belonging to the same end-to-end request, and records traces in a centralized database. We study both traffic generated by real users of the services and synthetic loads generated by open-loop workload generators.
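The tracing design described above (RPC-level spans, grouped per end-to-end request, stored centrally) can be sketched as follows. This is a minimal illustration in the style of Dapper/Zipkin span models, not the authors' implementation: the `Span` fields, the `TraceCollector` class, and the in-memory dict standing in for the centralized database are all assumptions.

```python
import uuid
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Span:
    trace_id: str             # shared by every RPC of one end-to-end request
    span_id: str              # unique per RPC
    parent_id: Optional[str]  # caller's span_id; None for the root span
    service: str
    start_us: int
    end_us: int


def new_id() -> str:
    return uuid.uuid4().hex[:16]


class TraceCollector:
    """In-memory stand-in for the centralized trace database (hypothetical)."""

    def __init__(self) -> None:
        self._traces: dict[str, list[Span]] = defaultdict(list)

    def record(self, span: Span) -> None:
        # Associating RPCs with their request amounts to grouping by trace_id.
        self._traces[span.trace_id].append(span)

    def end_to_end_latency_us(self, trace_id: str) -> int:
        root = next(s for s in self._traces[trace_id] if s.parent_id is None)
        return root.end_us - root.start_us

    def services(self, trace_id: str) -> list[str]:
        return sorted({s.service for s in self._traces[trace_id]})


# One request: nginx -> compose-post -> post-storage (service names are illustrative).
collector = TraceCollector()
tid = new_id()
root = Span(tid, new_id(), None, "nginx", 0, 900)
mid = Span(tid, new_id(), root.span_id, "compose-post", 100, 700)
leaf = Span(tid, new_id(), mid.span_id, "post-storage", 200, 500)
for span in (root, mid, leaf):
    collector.record(span)

print(collector.end_to_end_latency_us(tid))  # 900
print(collector.services(tid))               # ['compose-post', 'nginx', 'post-storage']
```

A real tracer would also propagate the trace ID and parent span ID in RPC headers so that downstream services can attach their own spans; that propagation step is omitted here.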

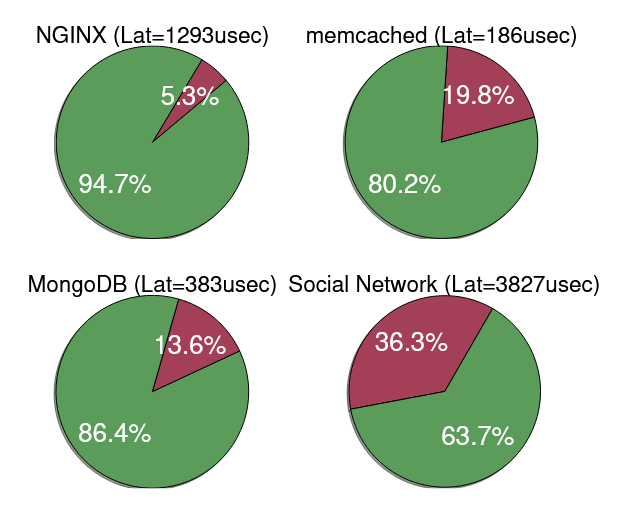

Fig. 3 shows the breakdown of execution time into network processing (red) and application processing (green) for three monolithic services (NGINX, memcached, MongoDB) and the end-to-end Social Network application.

Unlike monolithic services, however, microservices spend much more time sending and processing network requests over RPCs or REST APIs.

For the single-tier services, only a small fraction of execution time goes to network processing; with microservices, this share rises to 36.3% of total execution time, drastically changing the system's resource bottlenecks.
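One way to read the 36.3% figure is through Amdahl's law. The sketch below is an illustration added here, not an analysis from the paper, and it assumes the 36.3% share applies to the whole end-to-end execution time. Under that assumption, even infinitely fast application processing leaves the network time in place, capping the end-to-end speedup at about 1/0.363 ≈ 2.75×.

```python
def amdahl_speedup(fraction: float, factor: float) -> float:
    """Overall speedup when `fraction` of execution time is made `factor` times faster."""
    return 1.0 / ((1.0 - fraction) + fraction / factor)


NETWORK_SHARE = 0.363            # network share of execution time (Social Network)
APP_SHARE = 1.0 - NETWORK_SHARE  # application processing share

# Accelerating only application processing leaves network time untouched.
print(f"{amdahl_speedup(APP_SHARE, 2.0):.2f}")           # 1.47
print(f"{amdahl_speedup(APP_SHARE, float('inf')):.2f}")  # 2.75
```

Doubling application speed yields only about 1.47× end to end, which is one way to see why network processing becomes a first-class bottleneck for microservices.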

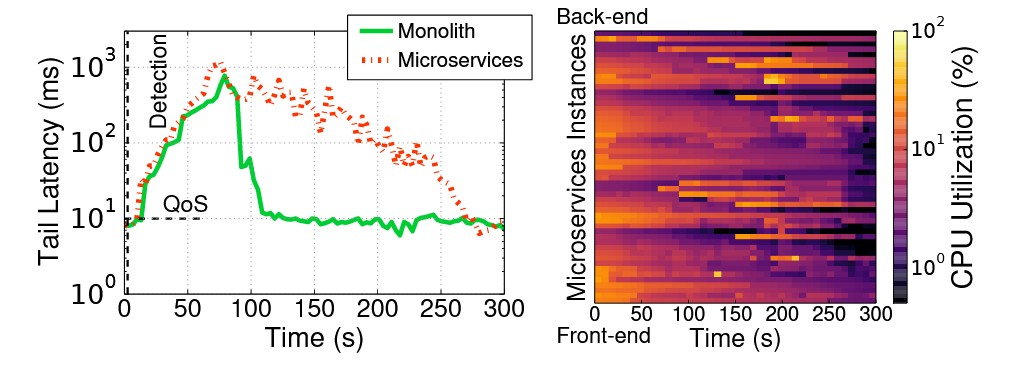

Third, microservices significantly complicate cluster management. Even though the cluster manager can scale out individual microservices on demand instead of the entire monolith, dependencies between microservices introduce backpressure effects and cascading QoS violations that quickly propagate through the system, making performance unpredictable.
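To see why a single slow tier can drag down a whole request, consider a deliberately simplified model (an assumption, not the paper's methodology). A request traverses a chain of tiers, each modeled as an M/M/1 queue with Poisson arrivals, so the mean end-to-end latency is the sum of each tier's mean response time, 1/(μ - λ). The model captures queueing delay accumulating along the critical path but not blocking-based backpressure, and the tier capacities below are hypothetical.

```python
import math


def mm1_response_time(arrival_rate: float, service_rate: float) -> float:
    """Mean response time of an M/M/1 queue; infinite if the tier is saturated."""
    if arrival_rate >= service_rate:
        return math.inf
    return 1.0 / (service_rate - arrival_rate)


def chain_latency(arrival_rate: float, service_rates: list[float]) -> float:
    """Mean end-to-end latency of a request that traverses every tier in order."""
    return sum(mm1_response_time(arrival_rate, mu) for mu in service_rates)


# Hypothetical tier capacities in requests/s; not values from the paper.
healthy = [2000.0, 1500.0, 1200.0]
degraded = [2000.0, 1500.0, 1050.0]  # last tier slowed, e.g. by interference
load = 1000.0                        # requests/s entering the chain

print(f"{chain_latency(load, healthy) * 1000:.1f} ms")   # 8.0 ms
print(f"{chain_latency(load, degraded) * 1000:.1f} ms")  # 23.0 ms
```

In this toy setting, a 12.5% capacity loss in one tier (1200 to 1050 requests/s) nearly triples mean end-to-end latency, and once any tier's capacity falls below the offered load, the chain's latency is unbounded.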

Finally, because hotspots propagate between tiers, once microservices experience a QoS violation they take longer to recover than traditional monolithic applications, even in the presence of the autoscaling mechanisms that most cloud providers employ.

Finally, we compare the impact of slow servers in clusters of equal size for the monolithic design of Social Network. In this case, goodput is higher, even as cluster sizes grow, since a single slow server only affects the monolith instance hosted on it, while the other instances operate independently.

In general, the more complex an application’s microservices graph, the more impactful slow servers are, as the probability that a service on the critical path will be degraded increases.
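The critical-path argument can be made quantitative with a back-of-the-envelope model (an illustrative assumption, not the paper's analysis). If a fraction p of servers is slow and each of the n tiers on a request's critical path lands on an independently chosen server, the probability that the request touches at least one slow server is 1 - (1 - p)^n. A monolith corresponds to n = 1, consistent with the observation that a slow server only affects the monolith instance it hosts.

```python
def p_degraded(slow_fraction: float, tiers_on_critical_path: int) -> float:
    """P(at least one critical-path tier runs on a slow server), assuming independent placement."""
    return 1.0 - (1.0 - slow_fraction) ** tiers_on_critical_path


# Hypothetical cluster in which 1% of servers are slow.
for n in (1, 5, 10, 20):
    print(n, round(p_degraded(0.01, n), 3))
# 1 0.01
# 5 0.049
# 10 0.096
# 20 0.182
```

Real placements are not independent (tiers may share servers, and schedulers avoid known-bad hosts), so these numbers only indicate the trend: the deeper the dependency graph, the more requests a single slow server can reach.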