Workload characterization for microservices

Status:: 🟩

Links:: Microservices vs. Monolith

Metadata

Authors:: Ueda, Takanori; Nakaike, Takuya; Ohara, Moriyoshi

Title:: Workload characterization for microservices

Date:: 2016

URL:: http://ieeexplore.ieee.org/document/7581269/

DOI:: 10.1109/IISWC.2016.7581269

Ueda, T., Nakaike, T., & Ohara, M. (2016). Workload characterization for microservices. 2016 IEEE International Symposium on Workload Characterization (IISWC), 1–10. https://doi.org/10.1109/IISWC.2016.7581269

The microservice architecture is a new framework to construct a Web service as a collection of small services that communicate with each other. It is becoming increasingly popular because it can accelerate agile software development, deployment, and operation practices. As a result, cloud service providers are expected to host an increasing number of microservices that can generate significant resource pressure on the cloud infrastructure. We want to understand the characteristics of microservice workloads to design an infrastructure optimized for microservices. In this paper, we used Acme Air, an open-source benchmark for Web services, and analyzed the behavior of two versions of the benchmark, microservice and monolithic, for two widely used language runtimes, Node.js and Java. We observed a significant overhead due to the microservice architecture; the performance of the microservice version can be 79.2% lower than the monolithic version on the same hardware configuration. On Node.js, the microservice version consumed 4.22 times more time in the libraries of Node.js than the monolithic version to process one user request. On Java, the microservice version also consumed more time in the application server than the monolithic version. We explain these performance differences from both hardware and software perspectives. We discuss the network virtualization in Docker, an infrastructure for microservices that has nonnegligible impact on performance. These findings give clues to develop optimization techniques in a language runtime and hardware for microservice workloads.

Notes & Annotations

Color-coded highlighting system used for annotations

📑 Annotations (imported on 2024-03-23#21:00:37)

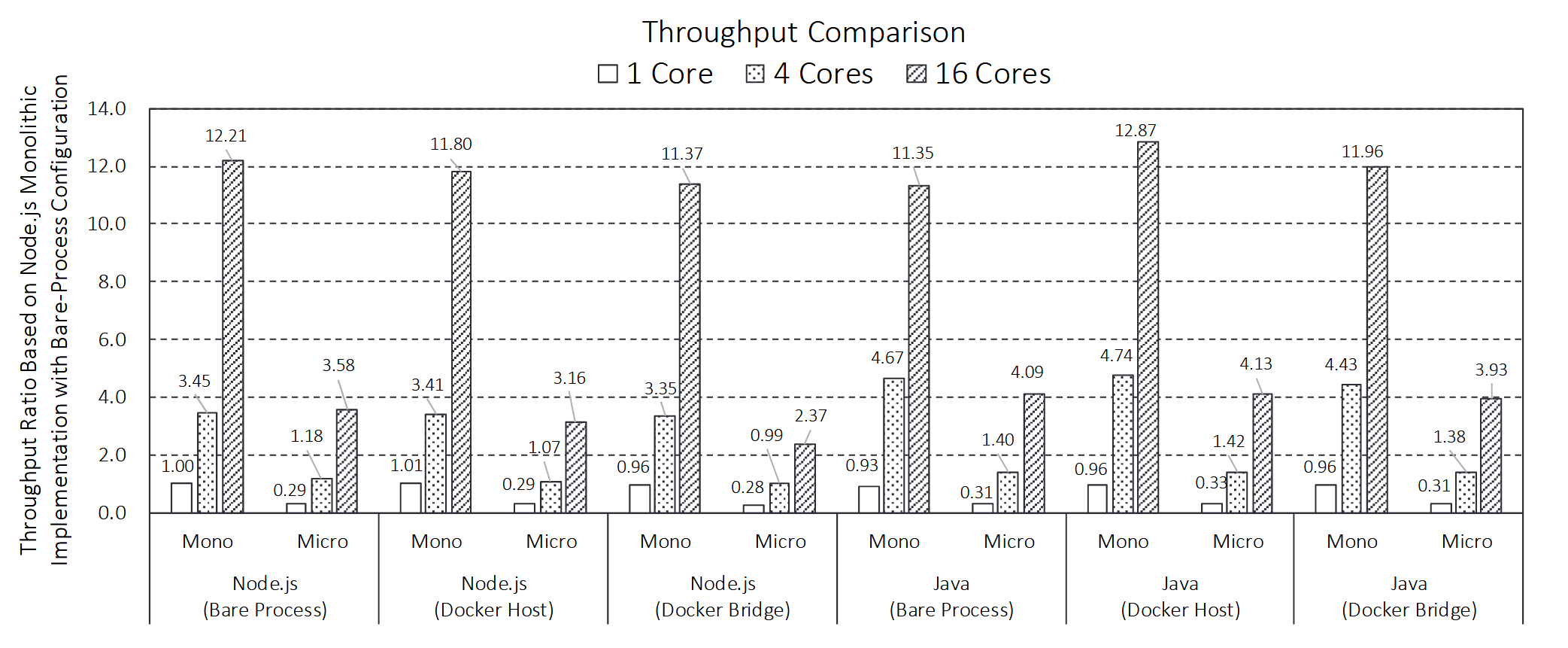

In comparison with the monolithic implementations, the Node.js microservice implementation degraded throughput up to 79.2% and the Java microservice implementation degraded throughput up to 70.2%. This is a noticeably worse performance penalty than previously reported [3]. From this fact, we argue that Web-service developers should carefully consider the impact on performance before transforming a monolithic implementation into a microservice implementation.
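To make these percentages concrete, here is a minimal sketch of the arithmetic. It assumes "degraded throughput up to X%" means the relative drop against the monolithic baseline on the same configuration; the excerpt does not state the formula, and the function names are hypothetical.

```python
def degradation(mono_throughput: float, micro_throughput: float) -> float:
    """Relative throughput loss of the microservice version vs. the monolith.

    Assumed definition: 1 - (microservice throughput / monolithic throughput).
    """
    if mono_throughput <= 0:
        raise ValueError("monolithic throughput must be positive")
    return 1.0 - micro_throughput / mono_throughput


def remaining_fraction(degradation_pct: float) -> float:
    """Fraction of the monolithic throughput that is left after a given loss."""
    return 1.0 - degradation_pct / 100.0


# Worst cases quoted in the annotation above.
node_remaining = remaining_fraction(79.2)  # roughly one fifth of the monolith
java_remaining = remaining_fraction(70.2)  # roughly three tenths of the monolith
```

Under this reading, the worst-case Node.js microservice version delivers about a fifth of the monolithic throughput, and the worst-case Java version about three tenths.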

The Docker network configurations exhibited non-negligible impact on performance. The bridge network that Docker uses as default exhibited up to 33.8% performance degradation in the Node.js implementations compared to the bare-process configuration. As expected, the Docker-host configuration always exhibited better performance than the Docker-bridge configuration because Docker host uses the interface of the host machine without virtualization. However, when we use the host interface, applications may cause a conflict of network ports if they use the same port. From this performance trend, we argue that developers should select an appropriate Docker network configuration depending on whether the developers have to avoid port conflicts.
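The port-conflict concern can be illustrated without Docker. The sketch below is an analogy, not the paper's setup: two plain sockets in one process stand in for two services that share the host's network interface, as containers do in the Docker-host configuration. The function name is hypothetical.

```python
import socket


def port_conflicts() -> bool:
    """Return True if a second bind to an already-bound TCP port is rejected."""
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        # Port 0 asks the OS for any free port, so the sketch does not
        # depend on a specific port being available.
        first.bind(("127.0.0.1", 0))
        first.listen(1)
        port = first.getsockname()[1]
        try:
            # A second service on the same interface wants the same port.
            second.bind(("127.0.0.1", port))
        except OSError:
            return True  # address already in use
        return False
    finally:
        first.close()
        second.close()
```

With a bridge network, each container has its own network namespace, so two services can listen on the same container port and be published on different host ports. That isolation is what the bridge configuration buys at the performance cost described above.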

There are other interesting performance results. Java exhibited better performance than Node.js on the 4 and 16 cores, except the 16-core bare-process configuration. Java exhibited super-linear scalability on the four cores. On the contrary, Node.js outperformed Java on the single core.
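As a reminder of what "super-linear scalability" means here, a minimal sketch follows. It assumes speedup is measured against the single-core throughput of the same implementation and configuration. The function names and the throughput numbers in the usage note are hypothetical, not values from the paper.

```python
def speedup(single_core_throughput: float, n_core_throughput: float) -> float:
    """Throughput on n cores relative to throughput on one core."""
    if single_core_throughput <= 0:
        raise ValueError("single-core throughput must be positive")
    return n_core_throughput / single_core_throughput


def is_super_linear(single_core_throughput: float,
                    n_core_throughput: float,
                    cores: int) -> bool:
    """Scaling is super-linear when the speedup exceeds the core count."""
    return speedup(single_core_throughput, n_core_throughput) > cores
```

For example, with made-up throughputs of 100 requests/s on one core and 450 requests/s on four cores, the speedup is 4.5, which exceeds 4 and therefore counts as super-linear.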

Throughput comparison among Node.js and Java implementations with Bare-process, Docker-host, and Docker-bridge experimental configurations. Values are relative ratios of throughput based on throughput of Node.js monolithic implementation with bare-process configuration.

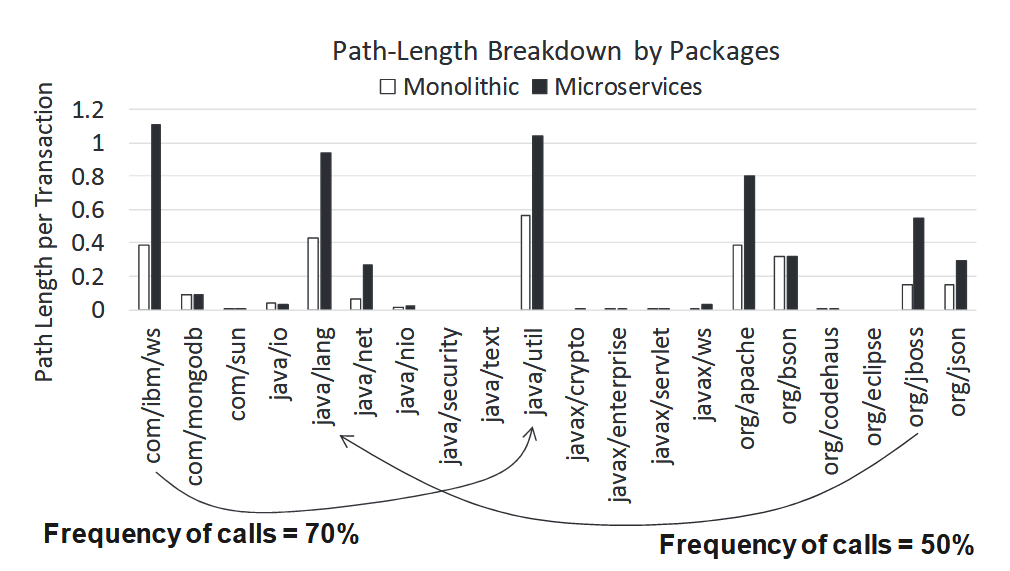

The results show that the Liberty layer is the main time-consuming part of the implementations. The com.ibm.ws package includes the main implementation of Liberty Core. The java.util package also generated a long path because the application server uses java.util package intensively. The org.jboss package consumed much more time. This means the communication of the microservice architecture caused the major bottleneck.

Breakdown of Java implementations

Even though microservices can accelerate agile developments, we must recognize the negative impact on performance.